Tech CEO Summit at US Senate: Elon Musk Calls AI Double-Edged Sword

Elon Musk said AI can be a tremendous source for good but warned about risks to civilization, according to a person in the closed-door session.

Elon Musk called artificial intelligence a double-edged sword, telling US senators Wednesday that the technology can be a tremendous source for good but warning about risks to civilization, according to a person in the closed-door session. The billionaire owner of X, formerly known as Twitter, was among more than 20 tech and civil society leaders attending the summit focused on AI.

Musk, the chief executive officer of Tesla Inc., told senators they shouldn't be worried about self-driving cars, for instance, but instead should focus their concerns on what he called deeper AI, the person in the room said. Deep AI is an apparent reference to deep learning, a type of artificial intelligence that teaches computers to process data in a way that imitates the human brain.

Musk, who spoke off the cuff, particularly raised concerns about data centers so powerful and big that they could be seen from space, with a level of intelligence that is currently hard to comprehend, the person said.

Among projects started by Musk, the world's richest person, is an AI company, xAI.

On China, Musk recounted his earlier trip to the country and said he raised the risks of deep AI and superintelligence with senior officials there.

Tech titans are giving senators advice on artificial intelligence in a closed-door forum

WASHINGTON (AP) — Senate Majority Leader Chuck Schumer has been talking for months about accomplishing a potentially impossible task: passing bipartisan legislation within the next year that encourages the rapid development of artificial intelligence and mitigates its biggest risks.

On Wednesday, he convened a meeting of some of the country's most prominent technology executives, among others, to ask them how Congress should do it.

The closed-door forum on Capitol Hill included almost two dozen tech executives, tech advocates, civil rights groups and labor leaders. The guest list featured some of the industry's biggest names: Meta's Mark Zuckerberg and X and Tesla's Elon Musk as well as former Microsoft CEO Bill Gates. All 100 senators were invited; the public was not.

“Today, we begin an enormous and complex and vital undertaking: building a foundation for bipartisan AI policy that Congress can pass,” Schumer said as he opened the meeting. His office released his introductory remarks.

Schumer, who was leading the forum with Sen. Mike Rounds, R-S.D., will not necessarily take the tech executives' advice as he works with colleagues to try to ensure some oversight of the burgeoning sector. But he is hoping they will give senators some realistic direction for meaningful regulation of the tech industry.

“It's going to be a fascinating group because they have different points of view,” Schumer said in an interview with The Associated Press before the event. “Hopefully we can weave it into a little bit of some broad consensus.”

Tech leaders outlined their views, with each participant getting three minutes to speak on a topic of their choosing.

Musk and former Google CEO Eric Schmidt raised existential risks posed by AI, Zuckerberg brought up the question of closed vs. “open source” AI models and IBM CEO Arvind Krishna expressed opposition to the licensing approach favored by other companies, according to a person in attendance.

There appeared to be broad support for some kind of independent assessments of AI systems, according to this person, who spoke on condition of anonymity due to the rules of the closed-door forum.

Some senators were critical of the private meeting, arguing that tech executives should testify in public.

Sen. Josh Hawley, R-Mo., said he would not attend what he said was a “giant cocktail party for big tech.” Hawley has introduced legislation with Sen. Richard Blumenthal, D-Conn., to require tech companies to seek licenses for high-risk AI systems.

“I don't know why we would invite all the biggest monopolists in the world to come and give Congress tips on how to help them make more money and then close it to the public,” Hawley said.

Congress has a lackluster track record when it comes to regulating technology, and the industry has grown mostly unchecked by government in the past several decades.

Many lawmakers point to the failure to pass any legislation surrounding social media. Bills that would better protect children, regulate activity around elections and mandate stricter privacy standards, for example, have stalled in the House and Senate.

“We don't want to do what we did with social media, which is let the techies figure it out, and we'll fix it later,” Senate Intelligence Committee Chairman Mark Warner, D-Va., said about the AI push.

Schumer said regulation of artificial intelligence will be “one of the most difficult issues we can ever take on,” and ticked off the reasons why: It's technically complicated, it keeps changing and it “has such a wide, broad effect across the whole world,” he said.

But his bipartisan working group — Rounds and Sens. Martin Heinrich, D-N.M., and Todd Young, R-Ind. — is hoping the rapid growth of artificial intelligence will create more urgency.

Rounds said ahead of the forum that Congress needs to get ahead of fast-moving AI by making sure it continues to develop “on the positive side” while also taking care of potential issues surrounding data transparency and privacy.

“AI is not going away, and it can do some really good things or it can be a real challenge,” Rounds said.

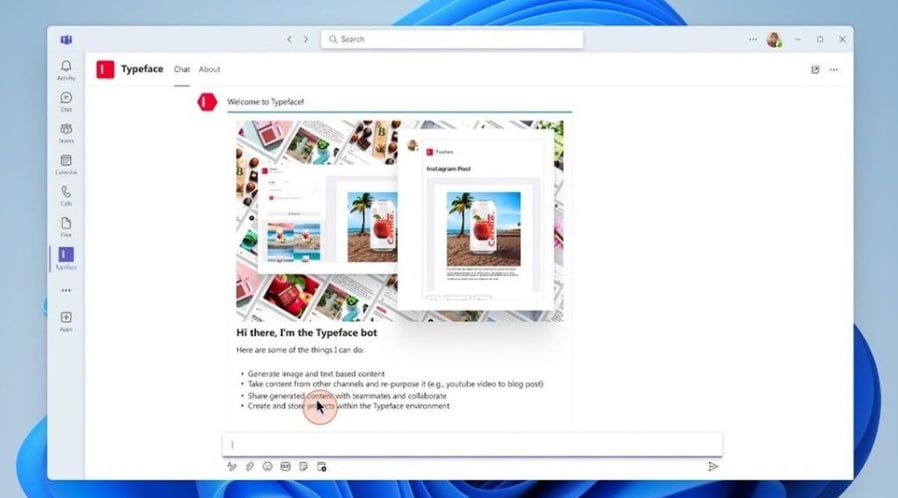

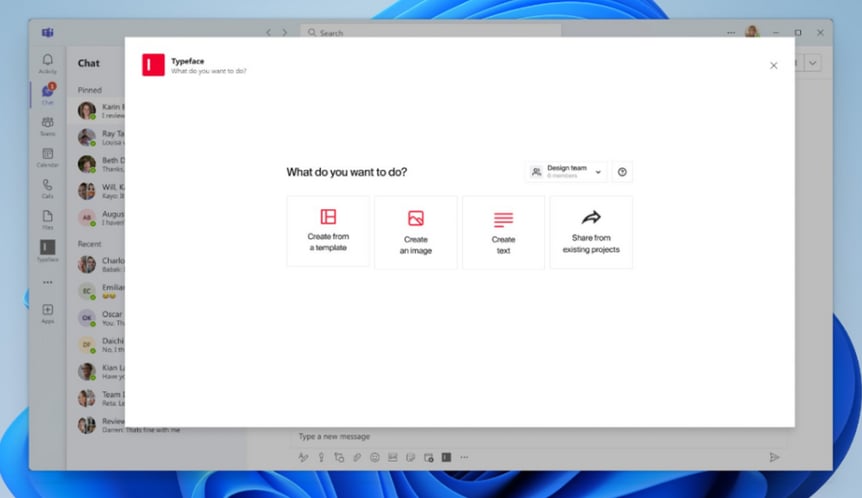

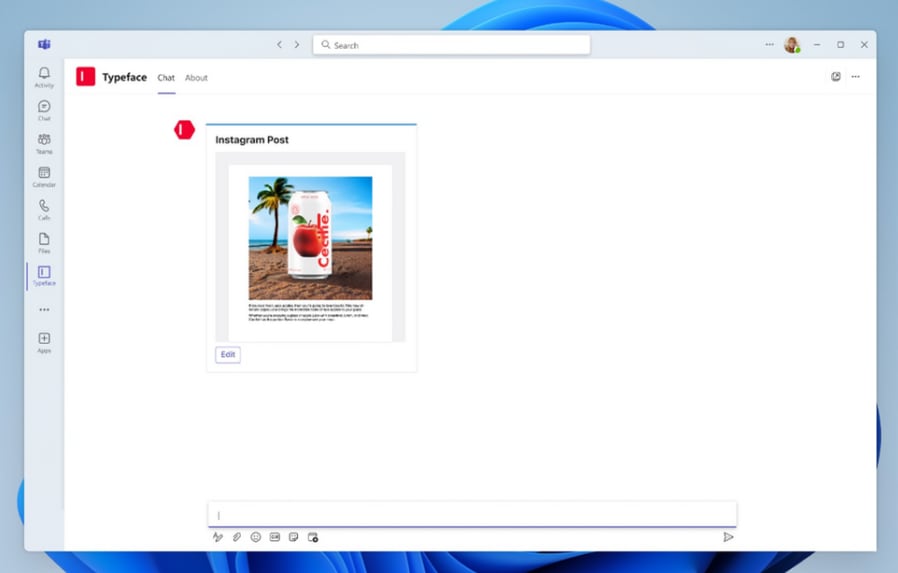

Sparked by the release of ChatGPT less than a year ago, businesses across many sectors have been clamoring to apply new generative AI tools that can compose human-like passages of text, program computer code and create novel images, audio and video. The hype over such tools has accelerated worries over their potential societal harms and prompted calls for more transparency in how the data behind the new products is collected and used.

“You have to have some government involvement for guardrails,” Schumer said. “If there are no guardrails, who knows what could happen.”

Some concrete proposals have already been introduced, including legislation by Sen. Amy Klobuchar, D-Minn., that would require disclaimers for AI-generated election ads with deceptive imagery and sounds. Hawley and Blumenthal's broader approach would create a government oversight authority with the power to audit certain AI systems for harms before granting a license.

In the United States, major tech companies have expressed support for AI regulations, though they don't necessarily agree on what that means. Microsoft has endorsed the licensing approach, for instance, while IBM prefers rules that govern the deployment of specific risky uses of AI rather than the technology itself.

Similarly, many members of Congress agree that legislation is needed, but there is little consensus on what it should look like. There is also division, with some members of Congress worrying more about overregulation while others are more concerned about the potential risks. Those differences often fall along party lines.

“I am involved in this process in large measure to ensure that we act, but we don't act more boldly or over-broadly than the circumstances require,” Young said. “We should be skeptical of government, which is why I think it's important that you got Republicans at the table.”

Some of Schumer's most influential guests, including Musk and Sam Altman, CEO of ChatGPT-maker OpenAI, have signaled more dire concerns evoking popular science fiction about the possibility of humanity losing control to advanced AI systems if the right safeguards are not in place.

But for many lawmakers and the people they represent, AI's effects on employment and the challenge of navigating a flood of AI-generated misinformation are more immediate worries.

Rounds said he would like to see the empowerment of new medical technologies that could save lives and allow medical professionals to access more data. That topic is “very personal to me,” Rounds said, after his wife died of cancer two years ago.

Some Republicans have been wary of following the path of the European Union, which signed off in June on the world's first set of comprehensive rules for artificial intelligence. The EU's AI Act will govern any product or service that uses an AI system and will classify such systems according to four levels of risk, from minimal to unacceptable.

A group of European corporations has called on EU leaders to rethink the rules, arguing that they could make it harder for companies in the 27-nation bloc to compete with rivals overseas in the use of generative AI.

“We've always said that we think that AI should get regulated,” said Dana Rao, general counsel and chief trust officer for software company Adobe. “We've talked to Europe about this for the last four years, helping them think through the AI Act they're about to pass. There are high-risk use cases for AI that we think the government has a role to play in order to make sure they're safe for the public and the consumer.”
