Apple WWDC 2021: iOS 15 to add support for Live Text, advanced Spotlight search using on-device intelligence

Apple WWDC 2021: New on-device privacy-first features coming to Apple iOS 15 and iPadOS 15, including Live Text and better Spotlight search

Apple WWDC 2021: Alongside upcoming updates to iOS, iPadOS, tvOS and watchOS, Apple on Monday announced at its annual WWDC developer conference that it is adding better Spotlight search and a new Live Text feature, both powered by on-device intelligence.

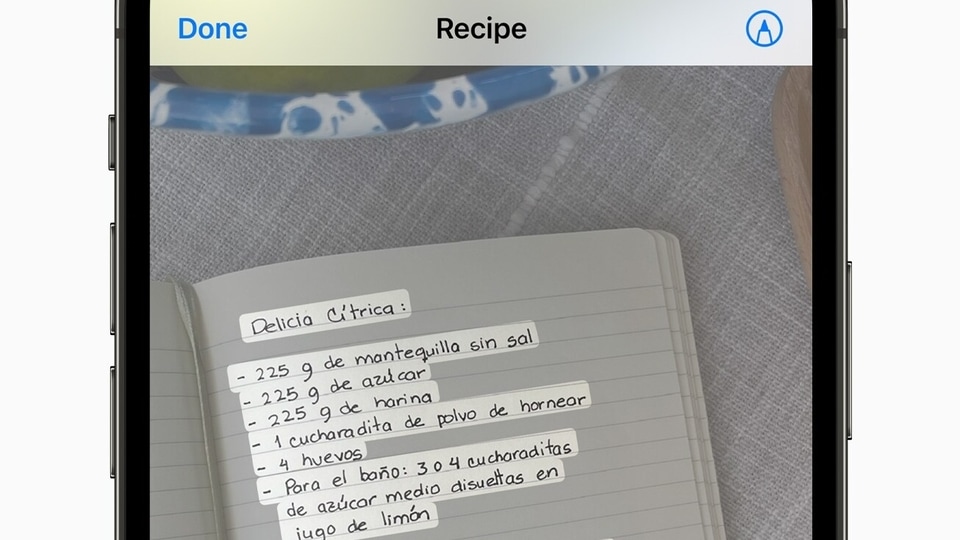

Live Text is a new OCR-like feature announced by Apple that will allow users to point their cameras at a particular object or scene and immediately interact with the text visible on the screen. For example, users can place a cafe's sign in their camera viewfinder and tap the contact number on it to place a call. Apple says you can simply copy text from a “whiteboard” by pointing your camera at one, then paste it into a mail app and send it to someone.

The new Live Text feature also works on visiting cards and on various kinds of contact information, such as email addresses. Apple also says you can use Live Text on “old handwritten family recipes,” which suggests the machine learning can read cursive handwriting if it is legible enough. It is worth noting that Google Lens and Pixel devices have had these features for a couple of years now.

Meanwhile, Visual Look Up on iOS 15 and iPadOS 15 will allow users to point their cameras at landmarks, plants and flowers, popular art, pet breeds and even books to look them up. This sounds rather similar to a feature that already exists in Google Lens. Apple also says that Spotlight is getting a lot smarter, with the ability to find text and handwriting in your existing photos, which is rather neat.

While it is true that Google Lens has offered features like these for a while now, Apple is touting the privacy benefits of Live Text and Visual Look Up, which identify text and objects in the camera view and in existing images. Since Apple will be doing all the processing work on the iPhone or iPad that you're using, with on-device intelligence, it looks like none of the information about those images or what you see in your camera viewfinder will ever leave your device.
