Elon Musk-led Twitter ripe for a misinformation avalanche

Elon Musk-led Twitter is showing signs of being vulnerable to misinformation.

Seeing might not be believing going forward as digital technologies make the fight against misinformation even trickier for embattled social media giants.

In a grainy video, Ukrainian President Volodymyr Zelenskyy appears to tell his people to lay down their arms and surrender to Russia. The video — quickly debunked by Zelenskyy — was a deep fake, a digital imitation generated by artificial intelligence (AI) to mimic his voice and facial expressions.

High-profile forgeries like this are just the tip of what is likely to be a far bigger iceberg. There is a digital deception arms race underway, in which AI models are being created that can effectively deceive online audiences, while others are being developed to detect the potentially misleading or deceptive content generated by these same models. With the growing concern regarding AI text plagiarism, one model, Grover, is designed to discern news texts written by a human from articles generated by AI.

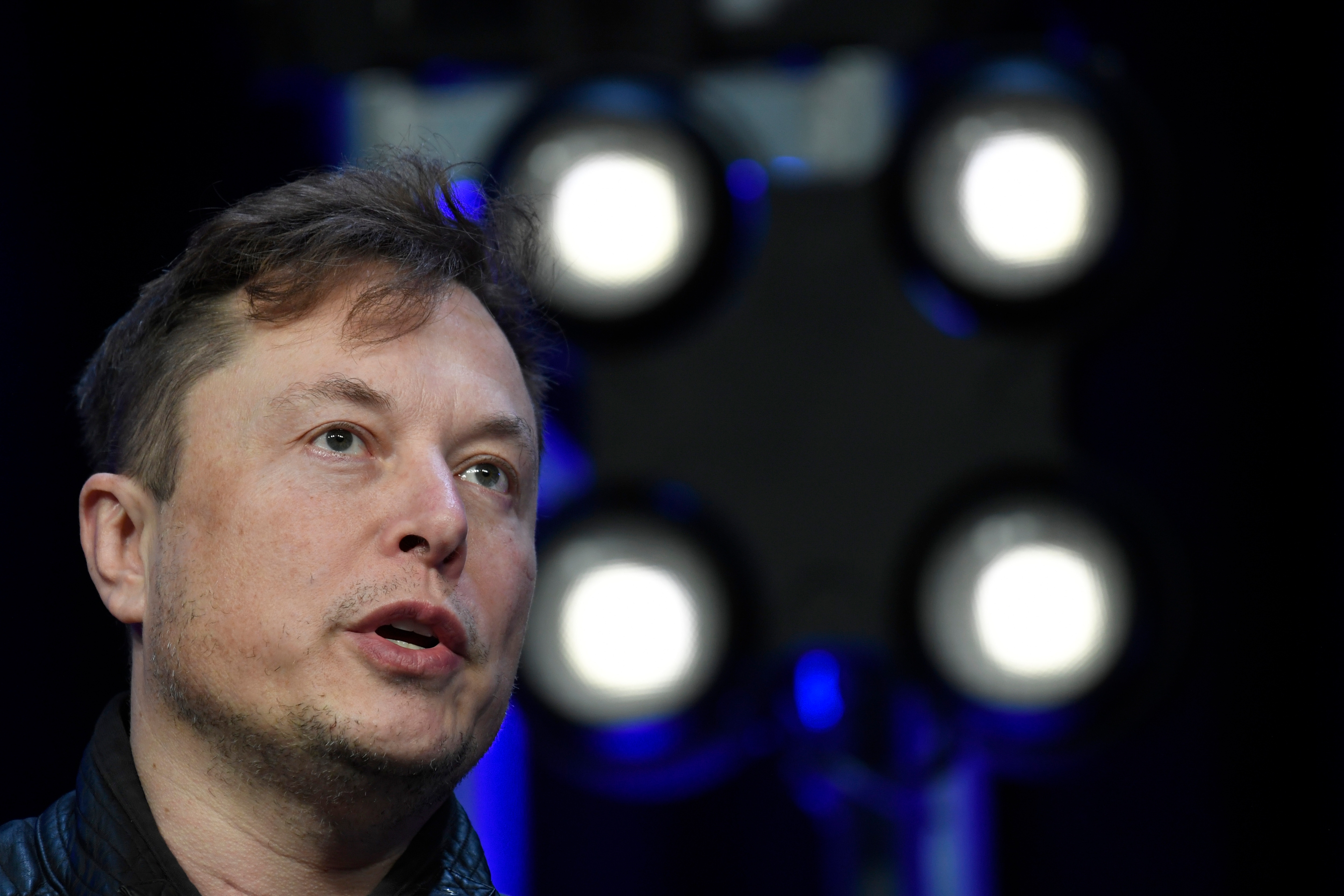

As online trickery and misinformation surge, the armour that platforms built against them is being stripped away. Since his takeover of Twitter, Elon Musk has trashed the platform's online safety division, and as a result misinformation is back on the rise.

Musk, like others, looks to technological fixes to solve his problems. He's already signalled a plan for upping use of AI for Twitter's content moderation. But this is neither sustainable nor scalable, and is unlikely to be the silver bullet. Microsoft researcher Tarleton Gillespie suggests: “automated tools are best used to identify the bulk of the cases, leaving the less obvious or more controversial identifications to human reviewers”.
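The division of labour Gillespie describes can be pictured as a simple triage rule. The sketch below is purely illustrative and does not describe how Twitter or any other platform actually moderates content. It assumes a hypothetical classifier has already given each post a score between 0 and 1, and the names `Post`, `route`, `REMOVE_AT` and `ALLOW_AT`, along with the threshold values, are invented for the example.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
REMOVE_AT = 0.95  # at or above this score, the tool acts on its own
ALLOW_AT = 0.05   # at or below this score, the post is left alone


@dataclass
class Post:
    post_id: str
    score: float  # assumed classifier estimate (0 to 1) that the post breaks policy


def route(post: Post) -> str:
    """Handle clear-cut cases automatically; send the rest to a person."""
    if post.score >= REMOVE_AT:
        return "auto_remove"
    if post.score <= ALLOW_AT:
        return "auto_allow"
    return "human_review"


posts = [Post("a", 0.99), Post("b", 0.01), Post("c", 0.60)]
decisions = {p.post_id: route(p) for p in posts}
print(decisions)
```

In this toy version only the ambiguous post "c" reaches a human reviewer. Where the thresholds sit determines how much work lands on people and how many mistakes the automated steps make.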

Some human intervention remains in the automated decision-making systems embraced by news platforms, but what shows up in newsfeeds is largely driven by algorithms. Similar tools play an important moderation role, blocking inappropriate or illegal content.

The key problem remains that technology 'fixes' aren't perfect and mistakes have consequences. Algorithms sometimes can't catch harmful content fast enough and can be manipulated into amplifying misinformation. Sometimes an overzealous algorithm can also take down legitimate speech.

Beyond their fallibility, there are core questions about whether these algorithms help or hurt society. The technology can better engage people by tailoring news to align with readers' interests. But to do so, algorithms feed off a trove of personal data, often accrued without a user's full understanding.

There's a need to know the nuts and bolts of how an algorithm works, that is, to open the ‘black box’.

But, in many cases, knowing what's inside an algorithmic system would still leave us wanting, particularly without knowing what data and user behaviours and cultures sustain these massive systems.

One way researchers may be able to understand automated systems better is by observing them from the perspective of users, an idea put forward by scholars Bernhard Rieder, from the University of Amsterdam, and Jeanette Hofmann, from the Berlin Social Science Centre.

Australian researchers have also taken up the call, enrolling citizen scientists to donate algorithmically personalised web content and examine how algorithms shape internet searches and how they target advertising. Early results suggest the personalisation of Google Web Search is less profound than we may expect, adding more evidence to debunk the ‘filter bubble’ myth, the idea that we exist in highly personalised content communities. Instead, search personalisation may be due more to how people construct their online search queries.

Last year, several AI-powered language and media generation models entered the mainstream. Trained on hundreds of millions of data points (such as images and sentences), these ‘foundational’ AI models can be adapted to specific tasks. For instance, DALL-E 2 is a tool trained on millions of labelled images, linking images to their text captions.

This model is significantly larger and more sophisticated than previous models built for automatic image labelling, and it can also be adapted to tasks like automatic image caption generation and even synthesising new images from text prompts. These models have prompted a wave of creative apps and uses, but concerns around artist copyright and the models' environmental footprint remain.

The ability to create seemingly realistic images or text at scale has also prompted concern from misinformation scholars — these replications can be convincing, especially as technology advances and more data is fed into the machine. Platforms need to be intelligent and nuanced in their approach to these increasingly powerful tools if they want to avoid furthering the AI-fuelled digital deception arms race.
