Researchers find the answer to detecting nasty deepfakes is hidden in your eyes

Deepfakes may be terrifying, but artificial intelligence that helps make them might also hold the key to identifying which videos are deepfakes and which are real.

A few years ago, deepfakes, the technology that lets you superimpose one face onto another person's in a video with matching facial expressions and reactions, were not considered much of a threat. But those same deepfakes have now become so uncannily accurate that spotting a fake has become extremely tough.

To prevent deepfake videos from being misused in election campaigns or used against unsuspecting former partners, a group of computer scientists has built a system that can detect deepfakes from portrait images, according to a report by The Next Web.

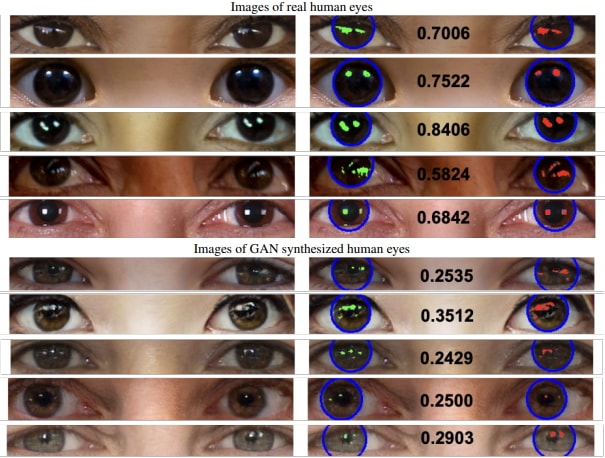

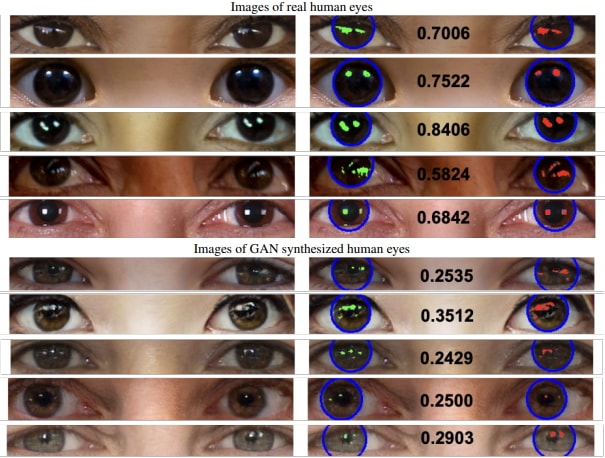

GANs, or Generative Adversarial Networks, are used for many artificial intelligence tasks, but deepfakes are their best-known use so far. The researchers found that GANs were unable to recreate one aspect of a person's face: a detail in their eyes.

The computer scientists found that when a camera captures a ‘real’ person, their corneas reflect the light falling on them. These reflections form a pattern that is mostly identical in both eyes. Fortunately, it appears that GANs cannot recreate these reflections accurately, which means the patterns (or even locations) of the reflections are inconsistent between the two eyes.

By comparing the reflective patterns in the subject's two eyes and looking for differences between them, the system can flag fakes with up to an impressive 94 percent accuracy, the report states. These inconsistencies can be “fixed” with editing tools after a deepfake is generated, though a sharp observer might still be able to spot the subtle edits.
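To make the idea of comparing reflections concrete, here is a minimal Python sketch. It is an illustration only and not the researchers' actual pipeline: it assumes each eye has already been cropped and aligned into a small grayscale grid, and the function names, the brightness threshold of 200, and the 0.5 overlap cut-off are all hypothetical choices made for this example. The overlap measure used here is intersection over union (IoU), a common way to score how well two regions match.

```python
def highlight_mask(eye, threshold=200):
    """Mark pixels at least as bright as `threshold` as corneal highlight.

    `eye` is a grid (list of rows) of grayscale values from 0 to 255.
    The threshold is an assumed value for illustration.
    """
    return [[pixel >= threshold for pixel in row] for row in eye]


def highlight_iou(left_eye, right_eye, threshold=200):
    """Return the intersection over union of the two highlight masks.

    Returns None when neither eye shows a highlight, since there is
    then nothing to compare (for example, under a weak light source).
    """
    left = highlight_mask(left_eye, threshold)
    right = highlight_mask(right_eye, threshold)
    intersection = 0
    union = 0
    for row_l, row_r in zip(left, right):
        for l, r in zip(row_l, row_r):
            if l and r:
                intersection += 1
            if l or r:
                union += 1
    if union == 0:
        return None
    return intersection / union


def looks_consistent(left_eye, right_eye, min_iou=0.5):
    """Treat the pair as consistent when the highlights overlap enough.

    `min_iou` is a hypothetical cut-off, not a value from the report.
    """
    score = highlight_iou(left_eye, right_eye)
    return score is not None and score >= min_iou


# A highlight in the same place in both eyes, as in a real photo.
real_left = [[10, 250, 10], [10, 250, 10], [10, 10, 10]]
real_right = [[10, 250, 10], [10, 250, 10], [10, 10, 10]]

# Highlights in different places, as a generated face might show.
fake_right = [[250, 10, 10], [10, 10, 10], [10, 10, 250]]

print(looks_consistent(real_left, real_right))
print(looks_consistent(real_left, fake_right))
```

A low overlap score in this sketch only hints that something is off. A real detector would first need to locate the face, the eyes, and the corneal region before any comparison like this could be made.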

There are other limitations to the system, such as camera position: if the person in the video was recorded from one side, the tool won't work. Similarly, if the light source isn't prominent enough, finding a clear pattern to compare may be difficult, according to the report. The full research paper is titled “Exposing GAN-generated faces using inconsistent corneal specular highlights”.
