ByteDance unveils OmniHuman-1, an AI model that can generate realistic videos from photos

ByteDance has introduced OmniHuman-1, an AI model that creates realistic videos from photos and audio and aims to improve video generation with advanced facial and motion synchronisation.

ByteDance, the parent company of TikTok, has introduced a new artificial intelligence model called OmniHuman-1. The model is designed to generate realistic videos from photos and sound clips. The development follows OpenAI's decision to expand access to its video-generation tool, Sora, for ChatGPT Plus and Pro users in December 2024. Google DeepMind also announced its Veo model last year, capable of producing high-definition videos based on text or image inputs. However, neither OpenAI's nor Google's models that convert photos into videos are publicly available.

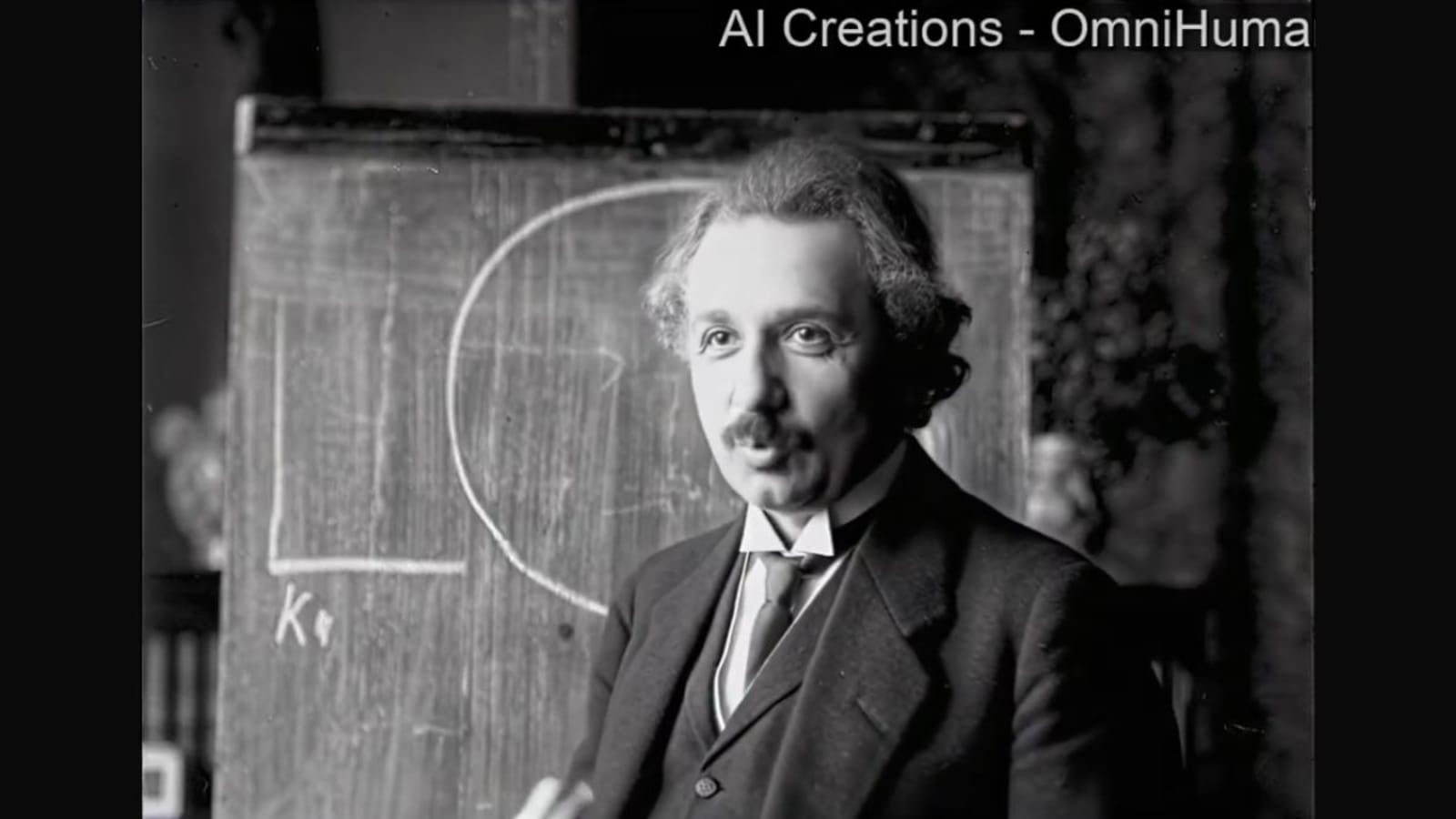

A technical paper (reviewed by the South China Morning Post) highlights that OmniHuman-1 specialises in generating videos of individuals speaking, singing, and moving. The research team behind the model claims that its performance surpasses existing AI tools that generate human videos based on audio. Although ByteDance has not released the model for public use, sample videos have circulated online. One of these, a 23-second clip that shows Albert Einstein appearing to give a speech, has been shared on YouTube.

Insights from ByteDance Researchers

ByteDance researchers, including Lin Gaojie, Jiang Jianwen, Yang Jiaqi, Zheng Zerong, and Liang Chao, have detailed their approach in a recent technical paper. They introduced a training method that integrates multiple datasets, combining text, audio, and movement to improve video-generation models. This strategy addresses scalability challenges that researchers have faced in advancing similar AI tools.

The paper states that this method improves video generation, although it does not directly name competing models. By mixing different types of data, the AI can generate videos with varied aspect ratios and body proportions, ranging from close-up shots to full-body visuals. The model produces detailed facial expressions synchronised with audio, along with natural head and gesture movements. These features could lead to broader applications in various industries.

Among the sample videos released, one features a man delivering a TED Talk-style speech with hand gestures and lip movements synchronised with the audio. Observers noted that the video closely resembles a real-life recording.
